INTRODUCTION

The integration of artificial intelligence (AI) is rapidly transforming organizational operations, enhancing efficiency, fostering innovation, and sharpening competitive advantages. AI’s capabilities extend from automating routine tasks to providing sophisticated data analytics, heralding a revolutionary shift in business practices. However, this widespread adoption also introduces significant risks, particularly concerning the handling of sensitive data and the potential for employee misuse.

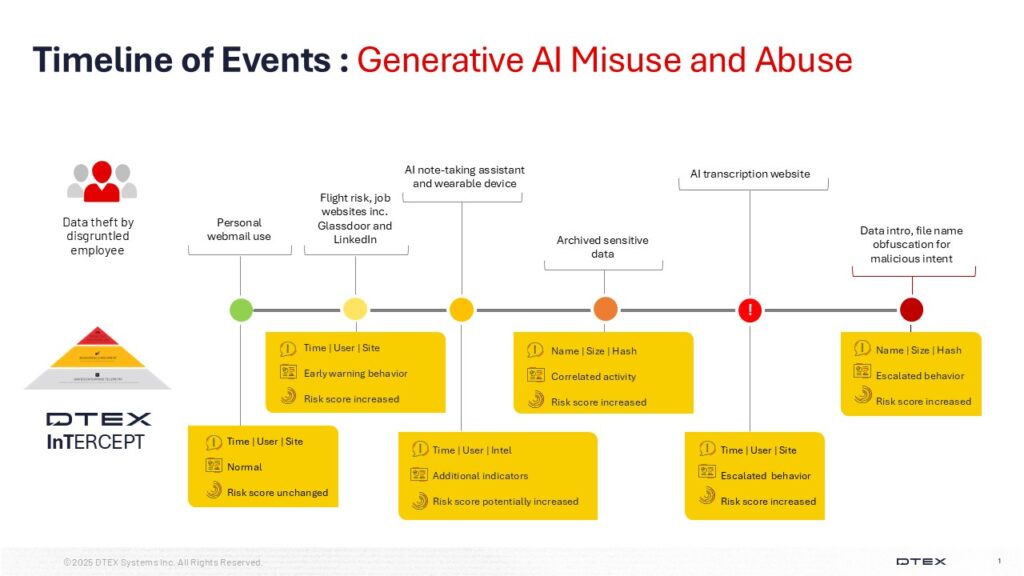

This investigation focuses on a disgruntled employee who is exploiting a new data exfiltration method using AI note-taking tools through their personal account. Such activities are particularly challenging to detect, as data aggregation and exfiltration take place within a cloud-based service. Additionally, since the use of personal accounts is currently allowed within the organization, this provides the employee with plausible deniability regarding any wrongdoing.

Organizations are increasingly investigating the diverse applications of this technology, with many asking, “How is GenAI being used?” In this Insider Threat Advisory (iTA), we explore the associated risks, examine the technologies involved, and discuss effective monitoring and detection strategies to support secure implementation.

DTEX InTERCEPT™ INVESTIGATION AND INDICATORS

A recent investigation revealed that an individual was using AI note-taking assistant tools to exfiltrate sensitive company data. This individual was part of the finance team and had been hired for a remote position at a banking and investment firm. The investigation encompassed both cyber indicators from the individual’s endpoint and numerous in-person interviews with colleagues and their manager. A notable discovery involved AI-generated audio files that were reportedly produced to secure a more favorable severance package.

The timely identification of this misconduct occurred “left of boom” (meaning pre-incident), allowing the organization to contain the majority of the data exfiltration and initiate legal proceedings to further reduce any residual risk.

This detailed investigation walkthrough and accompanying indicators are classed as “limited distribution” and are only available to approved insider risk practitioners. Log in to the customer portal to access the indicators or contact the i³ team to request access.

In our customer-facing version, we detail our investigation, beginning with the initial alert. The disgruntled employee demonstrated both technical skill and intelligence by aggregating data using Generative AI note-taking tools, both on and off corporate devices. They exfiltrated this data by employing their personal account for these cloud-based services.

A surprising revelation emerged during a joint investigation: the disgruntled employee had introduced an AI-generated voice recording of their manager making discriminatory comments. They attempted to disguise the file as a Zoom recording, intending to use it as leverage to negotiate a more favorable severance package.

Below is a high-level summary of how the investigation unfolded from the investigator’s perspective.

| Stage | Description |
| --- | --- |
| Potential Risk Indicator, Aggregation | AI transcription website – Fireflies.ai (http://Fireflies.ai) offers transcription, summarization, search, and analysis of voice conversations, including publishing summaries via unsecured links. It can record meetings as a bot, integrating with video conferencing platforms, but requires permission to join. |
| Aggregation | Archived sensitive data – Investigation into the file origins revealed they were initially copied from a folder labeled as sensitive. Interestingly, despite the folder’s designation, the files themselves lacked any sensitive markings or classifications. |
| Potential Risk Indicator | Personal webmail – Many use personal email for webmail and online services. LinkedIn activity, though not explicitly linked to an email address, suggests personal email was used for login. Acquired personal emails can be used to find other online services. |
| Potential Risk Indicator | Flight risk – The data analysis revealed no job applications submitted by the employee, nor any evidence of activity on other job sites. Analysts suspect that the employee may have visited a recruitment site but did not submit any applications using their corporate device. Given the low-risk profile, investigators have assessed the individual as highly intelligent, adept at presenting themselves as ‘normal’ by refraining from engaging in significant activity on their corporate device. |
| Circumvention, Exfiltration | Interviews with colleagues revealed a previously unknown AI note-transcribing necklace worn by the user of interest. This device synced with their phone and cloud storage, potentially exposing sensitive corporate information and PII during all meetings. |
| Potential Risk Indicator | Data introduction – The user was seen engaging with a file that had been introduced into the environment through an external link. It is suspected that the file the user downloaded may serve as a basis for a discrimination lawsuit. |
| Obfuscation | File renamed – The user expertly renamed the file to match Zoom’s naming conventions. |
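
The obfuscation stage suggests one simple check: a file whose name looks like a meeting recording, but which was not written by the conferencing client, may deserve a second look. The following is a minimal sketch only. The event fields (`file_name`, `creating_process`), the filename pattern, and the process names are all assumptions made for illustration; they are not the InTERCEPT schema or an authoritative list of Zoom naming conventions.

```python
import re
from dataclasses import dataclass

# Assumed pattern for a recording-style file name. Adjust it to the naming
# conventions actually observed in your environment.
RECORDING_NAME = re.compile(
    r"^GMT\d{8}-\d{6}_Recording.*\.(mp4|m4a)$", re.IGNORECASE
)

# Assumed names of processes expected to write genuine recordings.
EXPECTED_WRITERS = {"zoom.exe", "zoom.us"}


@dataclass
class FileEvent:
    """Hypothetical endpoint file event."""
    file_name: str
    creating_process: str  # process that created or renamed the file


def is_suspicious_recording(event: FileEvent) -> bool:
    """Return True when the name looks like a recording but the writer is unexpected."""
    looks_like_recording = RECORDING_NAME.match(event.file_name) is not None
    expected_writer = event.creating_process.lower() in EXPECTED_WRITERS
    return looks_like_recording and not expected_writer
```

A match is only a lead for an analyst, not proof of tampering; legitimate copies or moves of genuine recordings would also trigger it.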

RECOMMENDED ACTIONS

DTEX InTERCEPT Detection Multipliers

To enhance detection visibility and monitoring within their environments, organizations can implement specific strategies using DTEX InTERCEPT.

- Sensitive Named Lists. Organizations should conduct an audit to identify their critical assets, often referred to as “crown jewels,” and subsequently update the named lists.

- Integrations and Data Feeds. These elements are crucial for insider risk practitioners, as they provide additional context to ongoing activities.

- HR Feeds. This encompasses various aspects, including user organization data, known leaver categorization, disabled account monitoring, and customer contractor tracking.

- File Classifications. Enhanced data classification support will assist organizations by integrating established classifications into the dataset, without imposing additional burdens.

- HTTP Inspection Filtering. Enabling the entire range of rules provides a much more definitive view of a user’s behaviors. HTTP inspection provides comprehensive context for effectively monitoring and analyzing AI usage within the organization.
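
The investigation found files copied from a folder labeled as sensitive that carried no markings of their own. One way to reason about that gap is to let a file inherit its source folder's designation when it has no label. The sketch below illustrates the idea under stated assumptions: the folder path, the label strings, and the function name are hypothetical and do not describe how InTERCEPT file classification works.

```python
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical list of folders the organization has designated as sensitive.
SENSITIVE_FOLDERS = {PurePosixPath("/finance/restricted")}


def effective_label(source_path: str, file_label: Optional[str]) -> str:
    """Return the file's own label, or one inherited from a sensitive source folder."""
    if file_label:
        return file_label
    path = PurePosixPath(source_path)
    # path.parents lists every ancestor directory of the file.
    if any(folder in path.parents for folder in SENSITIVE_FOLDERS):
        return "sensitive (inherited)"
    return "unclassified"
```

For example, `effective_label("/finance/restricted/q3/forecast.xlsx", None)` falls back to the folder designation, while a file that already carries a label keeps it.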

Early Detection and Mitigation

AI transcription websites and AI wearables

Monitoring AI websites and applications is crucial for mitigating security risks and seizing business opportunities. Rapid advances in AI require ongoing tracking of new platforms so that organizations can identify tools that meet their goals and ensure those tools are used securely.

Regular reviews should assess each tool's benefits and risks, with a focus on security, data privacy, and ethics, so that integration decisions protect data and promote ethical AI practices. Staying current also offers a competitive edge.

Plaud, a wearable AI assistant, exemplifies a broader trend in AI wearables that also includes smart glasses. To facilitate a return-to-office strategy, organizations should establish a Personal Electronic Devices (PEDs) and Wearables Policy to define acceptable usage and bolster security.

Clipboard, PortAccessed, and file upload (HTTP Filtering) activity

Monitoring clipboard activity near sensitive files or AI usage is crucial for spotting unauthorized data pasting into AI platforms. The PortAccessed field is key for tracking uploads to generative AI websites. Vigilant monitoring of HTTP file uploads via keyword detection and threat hunting is essential. Regular audits of clipboard, PortAccessed, and HTTP activities, along with thorough employee data security training, greatly improve protection against AI-related threats.
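
The correlation described above, clipboard activity followed shortly by an upload to an AI site, can be sketched as a simple time-window join. This is an illustration under assumptions: the `Event` fields, the event kinds, and the five-minute window are hypothetical, the domain list contains only the one site named in this advisory, and the PortAccessed field is not modeled here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from urllib.parse import urlparse

AI_DOMAINS = {"fireflies.ai"}   # extend with the sites relevant to your organization
WINDOW = timedelta(minutes=5)   # assumed correlation window


@dataclass
class Event:
    """Hypothetical endpoint event; not a real product schema."""
    user: str
    kind: str            # "clipboard_copy" or "http_upload"
    timestamp: datetime
    target: str          # source file path for copies, URL for uploads


def is_ai_destination(url: str) -> bool:
    """True when the URL's host is a watched AI domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def copy_then_upload(events):
    """Pair each AI-site upload with same-user clipboard copies in the preceding window."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    hits = []
    for i, upload in enumerate(ordered):
        if upload.kind != "http_upload" or not is_ai_destination(upload.target):
            continue
        for prior in ordered[:i]:
            if (prior.kind == "clipboard_copy"
                    and prior.user == upload.user
                    and upload.timestamp - prior.timestamp <= WINDOW):
                hits.append((prior, upload))
    return hits
```

The quadratic scan is acceptable for a threat-hunting notebook; a production rule would more likely index events per user and slide a window over them.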

Detect keywords and crown jewels

Protecting organizational assets requires identifying and classifying sensitive data and maintaining a critical resource inventory. A response plan, including an incident response team and investigation protocols, is crucial for managing flagged content. Special procedures for sensitive cases, like CSAM, necessitate immediate escalation to HR, legal, and law enforcement. Continuous improvement via training and policy updates ensures compliance and ethical AI use.
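
Keyword detection against a crown-jewel inventory can be as simple as matching a maintained list of terms against file names, upload content, or transcripts. The sketch below assumes a hypothetical term list and helper names; it does not describe how named lists are implemented in any product.

```python
import re

# Hypothetical crown-jewel terms; in practice these come from the asset audit.
CROWN_JEWEL_TERMS = ["Project Aurora", "severance model"]


def build_matcher(terms):
    """Compile one case-insensitive, whole-word pattern for all terms."""
    # Longer terms first so they win over shorter terms they contain.
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def find_terms(text, matcher):
    """Return the distinct terms found in text, lowercased and sorted."""
    return sorted({m.group(0).lower() for m in matcher.finditer(text)})
```

A typical use is `find_terms(document_text, build_matcher(CROWN_JEWEL_TERMS))`, with any non-empty result routed to the response plan described above.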

AI use policy and awareness

Organizations must create clear AI usage policies and provide thorough employee training to mitigate AI risks. Policies should cover acceptable practices, ethical and legal issues, data privacy, bias prevention, transparency, and accountability. The use of AI note-taking tools, which may unintentionally share information via unsecured links, highlights the need for strict policies and awareness initiatives. The rise of wearable technology also presents significant risks to address in 2025.

Training should emphasize bias identification, data privacy understanding, and AI limitations. Data privacy policies must specifically tackle individual and organizational risks of sharing sensitive information on generative AI platforms. With the rapid adoption of AI technologies, employees must be well-informed about potential risks.

INVESTIGATION SUPPORT

For intelligence or investigations support, contact the DTEX i³ team. Extra care should be taken when implementing behavioral indicators on large enterprise deployments.

RESOURCES

DTEX i3 2024 Insider Risk Investigations Report
