Artificial intelligence (AI) has been dominating the news, even more than data breaches. It is certainly an exciting time for automation and analytics, and we have already seen that the implications for security are industry-changing. But just as AI-driven insights have the potential to deliver major gains in operational efficiency and threat mitigation (AI fatigue aside), generative AI (GenAI) tools also increase insider risk.

This is becoming more widely understood, especially given recent headlines. The complexities of the security landscape and IT environment have made risk difficult enough to manage, but the rise of GenAI has taken the challenge to a whole new level.

In security, visibility and control are everything. For security teams, and particularly those managing insider risk, understanding web application activity is critical to the mission, because any number of actions can lead to unauthorized sharing, use, or transfer of company data. It is vitally important that organizations take this seriously and figure out, before a data breach occurs, what kind of security policies to put in place to address their unique risk posture.

Expanded inspection capabilities

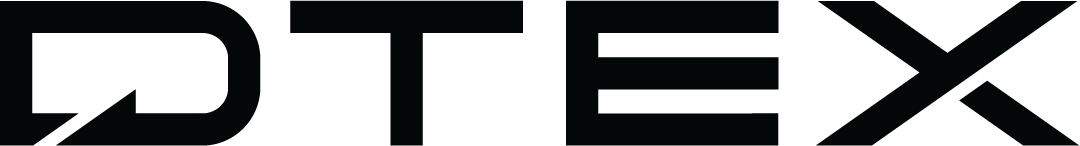

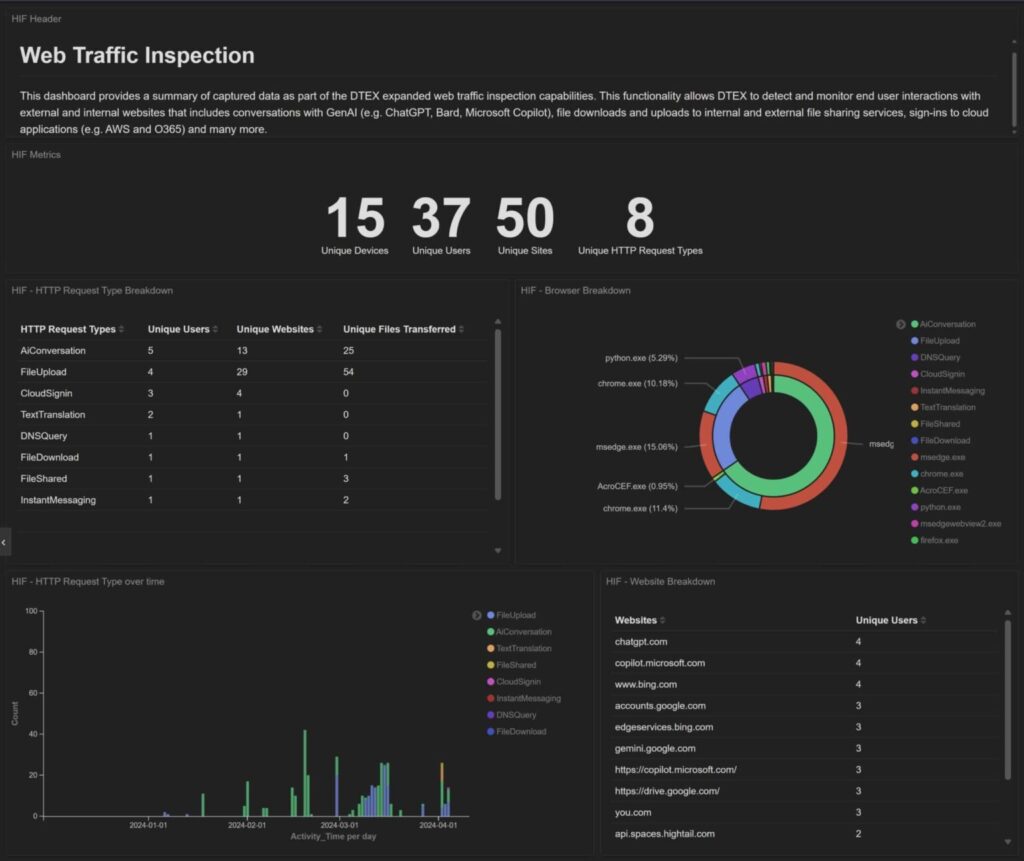

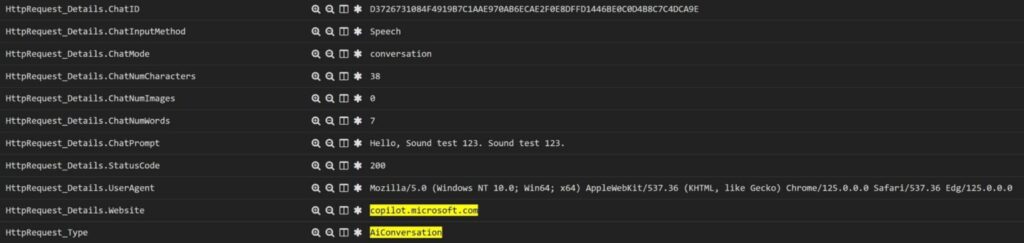

With all this in mind, DTEX has recently expanded its support for web traffic inspection, adding greater visibility into user activity while continuing to adhere to privacy standards. The InTERCEPT™ platform provides centralized policy enforcement that enables security teams to curate secure access to hundreds of GenAI applications, including differentiating between Microsoft Copilot enterprise accounts and personal usage. Analysts can then quickly gain a better understanding of user behavior, such as which web apps are being used and why employees are using them.

GenAI usage overview

Other attributes available from the inspection of interactions with Microsoft Copilot

Available in the DTEX Forwarder for Windows version 6.2.3 and later, DTEX InTERCEPT detects and monitors end-user interactions with external and internal websites, including:

- AI conversations with tools like ChatGPT, Bard, and Microsoft Copilot, detailing sent prompts as well as uploaded file names and associated attributes

- Internal web apps such as internal document and work management systems and wiki collaboration tools that may not support comprehensive logging

- Sign-ins to cloud applications like Amazon Web Services and Microsoft O365

- File uploads to file sharing services

- File downloads from document management sites, detailing interaction with files or folders

- Source text copied into Google Translate, with the ISO codes of the translated languages

Building on previous support, HTTP Inspection Filtering (HIF) enables rule sets that define how DTEX inspects outbound HTTP traffic (both secure and non-secure) and that control which activities are reported. This gives analysts a better understanding of what content is going into GenAI tools and enables them to set and enforce policies for GenAI, including dynamically blocking or limiting access based on a user's risk score or signs of careless behavior. Another interesting use case is improving insight into activity on internal document management systems and collaboration wikis.
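To make the idea of risk-based rules concrete, here is a minimal sketch in Python. It is not DTEX's rule format or API, which this post does not describe. The `Rule` fields, the host names, the thresholds, and the 0 to 100 risk scale are all assumptions chosen for illustration. The sketch only shows the general shape of such a policy: match an outbound request to a rule by host, then pick an action from the user's current risk score.

```python
from dataclasses import dataclass
from enum import Enum
from urllib.parse import urlparse


class Action(Enum):
    ALLOW = "allow"
    LIMIT = "limit"  # hypothetical middle tier, e.g. restrict uploads
    BLOCK = "block"


@dataclass(frozen=True)
class Rule:
    """One hypothetical rule: the host it covers and its risk thresholds."""
    host_suffix: str  # matches this host and any subdomain of it
    limit_at: int     # risk score at which access is limited
    block_at: int     # risk score at which access is blocked


# Illustrative values only; hosts and thresholds are assumptions.
RULES = [
    Rule(host_suffix="chatgpt.com", limit_at=40, block_at=70),
    Rule(host_suffix="copilot.microsoft.com", limit_at=60, block_at=90),
]


def host_matches(host: str, suffix: str) -> bool:
    """True for an exact host match or a subdomain of the suffix."""
    return host == suffix or host.endswith("." + suffix)


def decide(url: str, risk_score: int, rules=RULES) -> Action:
    """Return the action for an outbound request given the user's risk score."""
    host = (urlparse(url).hostname or "").lower()
    for rule in rules:
        if host_matches(host, rule.host_suffix):
            if risk_score >= rule.block_at:
                return Action.BLOCK
            if risk_score >= rule.limit_at:
                return Action.LIMIT
            return Action.ALLOW
    # Traffic that no rule covers is left alone in this sketch.
    return Action.ALLOW


if __name__ == "__main__":
    for score in (10, 50, 85):
        print(score, decide("https://chatgpt.com/", score).value)
```

Keeping thresholds per rule rather than global reflects the point made earlier about treating sanctioned enterprise tools differently from personal usage: in this sketch, a sanctioned tool can simply be given higher thresholds.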

The risk is real

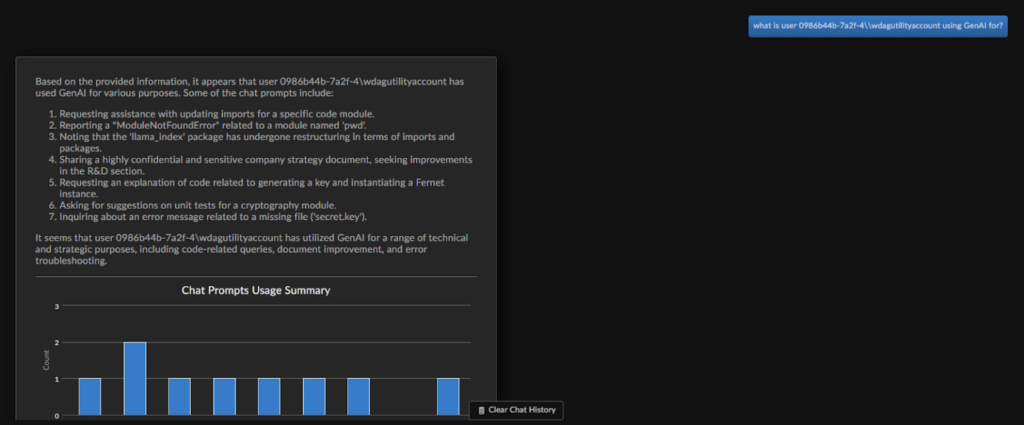

Remember Ai³? Back in February, DTEX announced the Ai³ Risk Assistant, designed to provide quick insight into the complicated nature of insider risk and behavioral intent. Ai³ uses the power of large language model (LLM) technology from Microsoft Azure OpenAI but, unlike most AI assistants, it does not have direct access to the internet. Ai³ also continues to support the DTEX InTERCEPT platform’s ability to protect user identity and datasets through patented Pseudonymization™ techniques for data privacy requirements and to redact personally identifiable information (PII) where necessary, while keeping security teams informed. The point is, make sure your technology vendors care about data privacy and protection. You can tell when it’s core to product design.

Use Case: Source code data breach

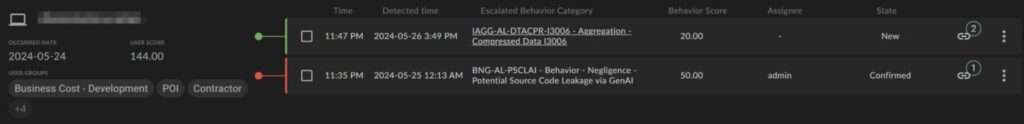

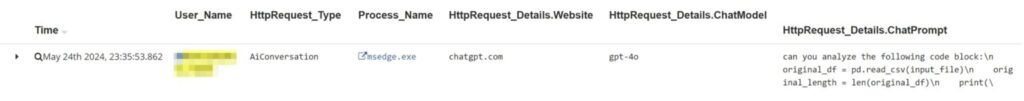

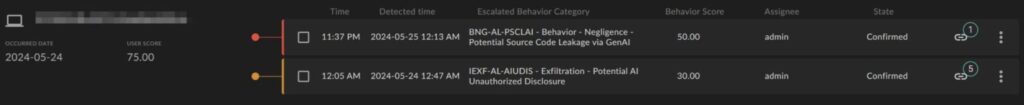

Based on the recent source code data breach referenced above, let’s take a deeper look at how DTEX uses AI to course-correct risky behavior and, at the same time, safely expedite risk investigations. The InTERCEPT Alerts page shows that source code has been uploaded to ChatGPT, including details of the event (examples 1 and 2 below). Ai³ assists analysts with questions like “Why is user X leveraging GenAI?” Responses summarize a list of activities, such as ‘assistance with emails’ and ‘uploading code block’, that traditionally might take analysts over an hour to collect and understand (example 3 below). Combined, these AI capabilities provide granular detection data about GenAI tool usage and user behavior.

Example 1: Alert on user pasting source code directly into ChatGPT

Raw Activities

Example 2: Alert on user uploading a script to ChatGPT for analysis

Raw Activities

Example 3: Ai³ summarizing GenAI tool usage
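For a sense of the manual roll-up that such a summary replaces, the Python sketch below counts one user's GenAI activities by application and category. It is not how Ai³ works; this post says only that Ai³ uses LLM technology. The `Activity` record, its fields, and the sample values are hypothetical and are not the InTERCEPT data model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """A hypothetical, simplified raw activity record."""
    user: str
    app: str
    category: str  # assumed label, e.g. "uploading code block"


def summarize(activities, user):
    """Count one user's activities per (app, category), most frequent first."""
    counts = Counter(
        (a.app, a.category) for a in activities if a.user == user
    )
    return counts.most_common()


if __name__ == "__main__":
    # Made-up sample records, for illustration only.
    events = [
        Activity("user-x", "ChatGPT", "uploading code block"),
        Activity("user-x", "ChatGPT", "assistance with emails"),
        Activity("user-x", "ChatGPT", "uploading code block"),
        Activity("user-y", "Bard", "assistance with emails"),
    ]
    for (app, category), n in summarize(events, "user-x"):
        print(f"{app}: {category} x{n}")
```

A count like this answers what a user did, but not why. The intent question in the use case above still needs the surrounding context that an analyst or an assistant brings.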

Enter the infinite frontier

Admittedly, there is a lot to sort out in terms of driving policy. Start with understanding your data (what is it, where is it, who is accessing it), your users (who are they, what are their roles, what are they doing), and the level of risk you are willing to take on (what is acceptable given your data and their roles). To do this effectively, you must understand behavior. The benefits are clear:

- Improve risk and compliance posture

- Govern and safeguard company intellectual property (IP)

- Extend visibility to fill the gaps

- And, arguably the most important benefit of all, reduce the risk of data loss associated with GenAI tools

The merging of human behavior with the speed and scale of AI opens an infinite frontier in insider risk management. Let us know if we can help. We have a no-cost risk assessment to help you better understand your current risk posture and to identify the gaps that you may not even know you have.
