A common challenge organizations face is striking a balance between employee privacy and insider risk monitoring. Using pseudonymization, organizations can apply the principle of proportionality, monitoring employees according to the nature of the risk they pose.

This post takes a deep dive into the differences between anonymization and pseudonymization, and explains how pseudonymization makes it possible to tailor employee monitoring so that it is proportionate and fit for purpose.

Understanding Pseudonymization and Anonymization

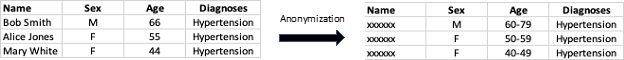

Anonymization

Anonymization removes or irreversibly masks all identifiers that link data to an individual. This preserves the individual’s privacy while still allowing the data to be used for other purposes. In the example below, anonymization simply removes names from the fields.

Researchers commonly use anonymization to depersonalize personal information before processing it for statistical purposes. For example, a healthcare organization may wish to share information about groups of patients with a pharmaceutical company. The pharmaceutical company needs specific data on individual patients, including test results or diagnoses, age, weight, gender, and other health factors. It does not need the patients’ names, Social Security numbers, phone numbers, email addresses, or other data that could link a patient to a record. Anonymization strips this personal information while keeping the data the research requires.
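To make the research example concrete, here is a minimal Python sketch of the simplest form of anonymization: dropping direct identifiers from each record. The field names (`name`, `ssn`, `phone`, `email`) and the sample patient are hypothetical, chosen only for illustration.

```python
# Hypothetical direct-identifier fields; a real dataset's schema will differ.
DIRECT_IDENTIFIERS = {"name", "ssn", "phone", "email"}


def anonymize(records: list[dict]) -> list[dict]:
    """Return copies of the records with direct identifiers removed."""
    return [
        {key: value for key, value in record.items() if key not in DIRECT_IDENTIFIERS}
        for record in records
    ]


# Hypothetical sample record.
patients = [
    {
        "name": "Alice Smith",
        "ssn": "000-00-0000",
        "email": "alice@example.com",
        "age": 54,
        "weight_kg": 68,
        "gender": "F",
        "diagnosis": "hypertension",
    },
]
print(anonymize(patients))
# [{'age': 54, 'weight_kg': 68, 'gender': 'F', 'diagnosis': 'hypertension'}]
```

Dropping direct identifiers is only a first step. Remaining attributes such as age, gender, and location can still single out individuals in combination, so real anonymization usually also generalizes or aggregates those fields.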

While useful in the example above, anonymization is not an ideal solution for insider risk management. Behavior analytics can baseline a group of people with similar roles, but when an anomaly occurs, the risk team needs to know precisely which user performed those actions. Knowing only that it was one of hundreds of people in a similar role won’t prevent data loss, and blocking every user in that group would needlessly disrupt legitimate work.

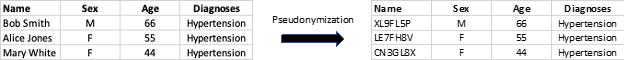

Pseudonymization

The EU’s General Data Protection Regulation (GDPR) encourages the use of pseudonymization and refers to it as “a central feature of ‘data protection by design’”. The GDPR text defines pseudonymization as:

“…the processing of personal data in such a manner that the personal data can no longer be attributed to a specific data subject without the use of additional information…”

Pseudonymization replaces private identifiers with pseudonyms or artificial identifiers. For example, a user’s name could be replaced by a unique random string. This protects the user’s identity while the underlying data remains suitable for data analysis and data processing.

Unlike anonymization, pseudonymization is reversible when necessary. Each pseudonym is unique and can be looked up in a separate database to identify the associated individual. This database is the “additional information” referenced in the definition above, so it is critical that organizations keep it separate from the pseudonymized data and protect it from unauthorized access.
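The mechanism described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a random-token approach with an in-memory lookup table; the `PseudonymVault` class and its methods are hypothetical names, and a real deployment would keep the mapping in a separate, access-controlled store rather than in the same process as the analytics data.

```python
import secrets


class PseudonymVault:
    """Hypothetical token vault holding the GDPR's 'additional information'."""

    def __init__(self) -> None:
        self._token_to_identity: dict[str, str] = {}
        self._identity_to_token: dict[str, str] = {}

    def pseudonymize(self, identity: str) -> str:
        # Reuse an existing token so the same person always gets the same pseudonym.
        if identity in self._identity_to_token:
            return self._identity_to_token[identity]
        token = "user-" + secrets.token_hex(8)  # unique random string: 16 hex characters
        while token in self._token_to_identity:  # guard against an unlikely collision
            token = "user-" + secrets.token_hex(8)
        self._identity_to_token[identity] = token
        self._token_to_identity[token] = identity
        return token

    def reidentify(self, token: str) -> str:
        # Reversal is only possible for someone with access to the vault.
        return self._token_to_identity[token]


vault = PseudonymVault()
t1 = vault.pseudonymize("jane.doe")
t2 = vault.pseudonymize("jane.doe")
assert t1 == t2
print(t1)  # 'user-' plus 16 random hex characters; differs on every run
print(vault.reidentify(t1))  # jane.doe
```

Random tokens carry no information about the person, so the analytics side learns nothing from the pseudonym itself; all of the re-identification power sits in the vault.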

Pseudonymization is useful in defending against insider risk. It lets analysts track the behavior of individual users, while removing personal identifiers reduces the risk of bias and protects workers’ privacy. The ability to reverse pseudonymization, when warranted, allows risk teams to identify and stop individuals who are putting data at risk.

Pseudonymization Applies the Principle of Proportionality

DTEX’s patented Pseudonymization technique within the InTERCEPT platform enables tailored metadata collection, allowing government and enterprise organizations to address a range of use cases, including:

Meeting GDPR and CCPA compliance, and respecting employee privacy

The DTEX InTERCEPT platform collects the minimum amount of metadata needed to build a forensic audit trail, significantly reducing reliance on intrusive data sources that are not needed to improve security. The platform applies its patented Pseudonymization technique to tokenize PII across raw data fields, including username, email, IP address, domain name, and device name. This enables organizations to identify high-risk events without infringing on individuals’ privacy, while complying with GDPR and CCPA.
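This post does not describe how DTEX’s patented technique works, so the sketch below is not it. It substitutes a generic, widely used approach: keyed hashing (HMAC-SHA256) that turns each PII field into a consistent token, so events from the same user can still be correlated without exposing who the user is. The field names, the `tok_` prefix, the key, and the separate `lookup` table are all assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical field names mirroring the PII fields listed above.
PII_FIELDS = ("username", "email", "ip_address", "domain", "device_name")


def tokenize(field_name: str, value: str, key: bytes) -> str:
    """Deterministic keyed token: same field, value, and key always give the same token."""
    message = f"{field_name}:{value}".encode("utf-8")
    digest = hmac.new(key, message, hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]  # truncated to 64 bits for readability


def pseudonymize_event(event: dict, key: bytes, lookup: dict[str, str]) -> dict:
    """Return a copy of the event with PII fields replaced by tokens.

    The token -> original value mapping is written to `lookup`, which stands in
    for a separately stored and protected re-identification table.
    """
    out = dict(event)
    for field_name in PII_FIELDS:
        if field_name in out:
            original = str(out[field_name])
            token = tokenize(field_name, original, key)
            lookup[token] = original
            out[field_name] = token
    return out


# Usage with a hypothetical key and event; never hard-code a real key.
key = b"demo-key-load-from-a-secrets-manager"
lookup: dict[str, str] = {}
event = {
    "username": "jdoe",
    "email": "jdoe@example.com",
    "ip_address": "10.0.0.7",
    "domain": "CORP",
    "device_name": "LAPTOP-42",
    "action": "file_upload",
    "bytes": 5_242_880,
}
safe = pseudonymize_event(event, key, lookup)
print(safe["action"], safe["bytes"])  # file_upload 5242880
```

Including the field name in the HMAC input keeps a username and a device name with the same text from producing the same token. Because HMAC is one-way, reversal relies on the separately stored `lookup` table, which plays the role of the GDPR’s “additional information”.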

Meeting CNSSD 504 and escalating investigations of malicious activity with justifiable cause

When observed indicators of malicious intent warrant unmasking a person’s identity, the unmasking requires “dual authorization” and is backed by a clear, evidentiary-quality audit trail. Two DTEX administrators (typically one from security and one from HR or legal) must agree to the unmasking and provide a justification.
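A dual-authorization check can be sketched as follows. This is a hypothetical Python illustration, not DTEX’s implementation: `UnmaskRequest`, `unmask`, the audit-log fields, and the rule that approvals must come from two different people in two different functions are assumptions based on the description above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class UnmaskRequest:
    """Hypothetical request to reveal the identity behind a pseudonym."""
    token: str
    justification: str
    approvals: dict[str, str] = field(default_factory=dict)  # approver -> business function

    def approve(self, approver: str, function: str) -> None:
        # Keyed by approver, so one person approving twice still counts once.
        self.approvals[approver] = function

    def is_authorized(self) -> bool:
        # Require a written justification plus two distinct approvers
        # from two different functions (for example, security and legal).
        return (
            bool(self.justification.strip())
            and len(self.approvals) >= 2
            and len(set(self.approvals.values())) >= 2
        )


audit_log: list[dict] = []


def unmask(request: UnmaskRequest, lookup: dict[str, str]) -> str:
    """Reveal the identity only if dual authorization is satisfied, and log it."""
    if not request.is_authorized():
        raise PermissionError("dual authorization not satisfied")
    audit_log.append({
        "token": request.token,
        "approvers": sorted(request.approvals),
        "functions": sorted(set(request.approvals.values())),
        "justification": request.justification,
        "unmasked_at": datetime.now(timezone.utc).isoformat(),
    })
    return lookup[request.token]


# Usage with hypothetical tokens, names, and justification text.
lookup = {"tok_1a2b3c4d": "jane.doe"}
req = UnmaskRequest("tok_1a2b3c4d", "Repeated uploads of sensitive files to personal cloud storage")
req.approve("analyst.a", "security")
try:
    unmask(req, lookup)
except PermissionError as exc:
    print(exc)  # dual authorization not satisfied
req.approve("counsel.b", "legal")
print(unmask(req, lookup))  # jane.doe
```

Recording who approved, from which function, and why at the moment of unmasking is what turns the reversal into an auditable event rather than a silent lookup.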

DTEX helps hundreds of organizations worldwide better understand their workforce, make effective security investments, and comply with regulatory requirements while keeping data private and protected. Read our Data Privacy and Protection Solution Brief to learn more.
