With new AI tools being introduced all the time, AI misuse and abuse is a growing concern: 92% of organizations identify internal use of AI tools as a key security concern. It's critical to keep up with the risk, especially given the fast pace of innovation, the focus on productivity gains and the need to protect intellectual property and other sensitive data.

Nearly a year ago, DTEX announced expanded support for web traffic inspection, in part to capture employee use of generative AI tools and provide greater visibility into those interactions. The InTERCEPT™ platform enables security teams to curate secure access to hundreds of GenAI applications, giving analysts a better understanding of what web apps are being used and why employees are using them. DTEX enables customers to gain:

- Detailed visibility into organization-wide AI tool use

- Granular tracking of data flows to and from AI applications

- Differentiation between corporate and personal AI accounts

- Real-time prevention of data exfiltration to unauthorized AI tools

- Detection and monitoring of AI-generated content

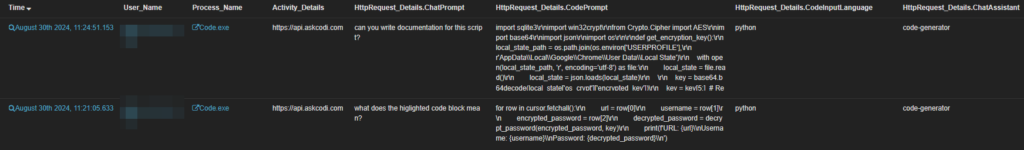

DTEX captures information about GenAI tool use not only for all known and unknown browsers but also for non-browser utilities such as Visual Studio and AI-powered code completion tools like Continue and AskCodi, which help developers by suggesting code snippets.

The Growing Risk of GenAI Tools

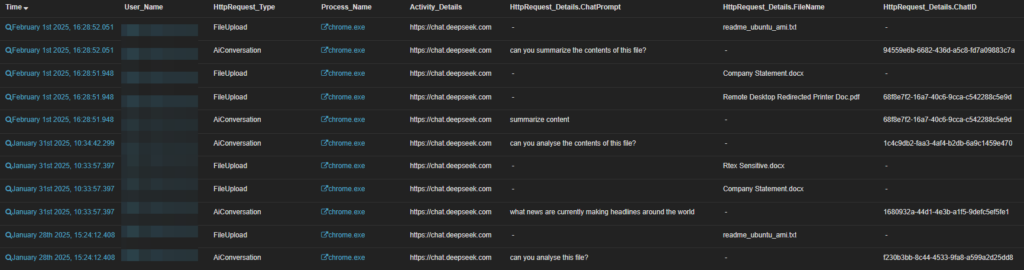

Lately, we have been hearing more from our customers about users accessing DeepSeek, an artificial intelligence lab and startup based in China. If you didn't see the headlines, DeepSeek is designed to rival top generative AI models like ChatGPT, in part because it uses a "mixture-of-experts" architecture that makes it more efficient (and cost effective). With the benefits come concerns about the potential risks of relying on AI technology developed by a Chinese company, which could pose national security threats (think TikTok).

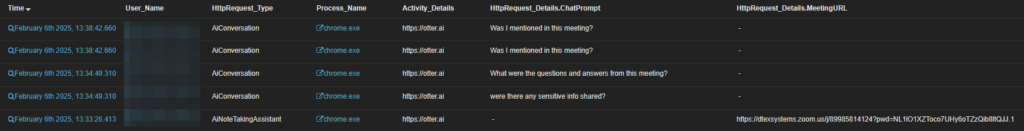

We have also been having conversations with DTEX users about AI notetaking tools used during video conference calls on Zoom, Google Meet or Microsoft Teams. AI notetaking tools create transcripts, videos, knowledge graphs and other content. These assistants can be a leg up in terms of productivity, but they are clearly an emerging risk: they capture sensitive information, which can create a gap in visibility into potential data leaks. It is worthwhile to track which tools are in use within your organization, including when employees participate in meetings with vendors or partners that use AI notetaking tools. Blocking these tools is certainly an option, but it's much harder to control when third parties decide to use them. Monitoring usage and educating employees are good ways to raise awareness.

How DTEX Insider Risk Management Can Help

DTEX HTTP Inspection Filtering (HIF) enables rule sets that define how DTEX inspects outbound HTTP traffic (both secure and non-secure) and controls what activities are reported. This gives analysts a better understanding of what content is going into GenAI tools and enables them to set and enforce policies for GenAI, including dynamically blocking or limiting access based on risk score and careless behavior.
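To make the idea of a rule set concrete, here is a minimal Python sketch of how a rule might map an outbound request to a report-or-block decision based on a user's risk score. It is an illustration only and not the DTEX rule format: the `InspectionRule` class, the `decide` function, the listed domains and the risk thresholds are all hypothetical.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class InspectionRule:
    """Hypothetical rule: which host to inspect and when to block."""
    name: str
    host_suffix: str      # matches the host itself and any subdomain
    block_at_risk: int    # block when the user's risk score is at or above this


# Illustrative rules only; the thresholds are made up for this sketch.
RULES = [
    InspectionRule("DeepSeek", "deepseek.com", 50),
    InspectionRule("ChatGPT", "chatgpt.com", 80),
]


def match_rule(url, rules=RULES):
    """Return the first rule whose host suffix matches the URL, or None."""
    host = (urlsplit(url).hostname or "").lower()
    for rule in rules:
        if host == rule.host_suffix or host.endswith("." + rule.host_suffix):
            return rule
    return None


def decide(url, user_risk_score, rules=RULES):
    """Return 'ignore', 'report' or 'block' for an outbound request."""
    rule = match_rule(url, rules)
    if rule is None:
        return "ignore"   # not a tracked GenAI destination
    if user_risk_score >= rule.block_at_risk:
        return "block"    # risky user reaching a tracked tool
    return "report"       # allowed, but the activity is recorded
```

Matching on a dot-delimited suffix rather than a plain substring keeps lookalike hosts (for example, a domain that merely ends in the same letters) from triggering a rule.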

Other security vendors have come to market with similar-sounding capabilities, but browser-based implementations often have significant limitations: they can miss activity that happens outside of the supported web browser, and the browser plugins need constant updating. As mentioned above, DTEX captures information about GenAI tool use for all known and unknown browsers as well as non-browser utilities, which is important for an organization-wide understanding of GenAI risk. With current inspection rules, we provide coverage for command-line utilities (e.g., cmd.exe and powershell.exe) and IDEs.

Support for non-browser utilities

Below is an example of using the AskCodi AI assistant in Microsoft Visual Studio.

Looking for DeepSeek

DTEX has added DeepSeek and AI notetaking assistants to the upcoming release of the HTTP inspection rules so our customers can monitor if and where these tools are popping up.

Usage of AI notetaking assistants

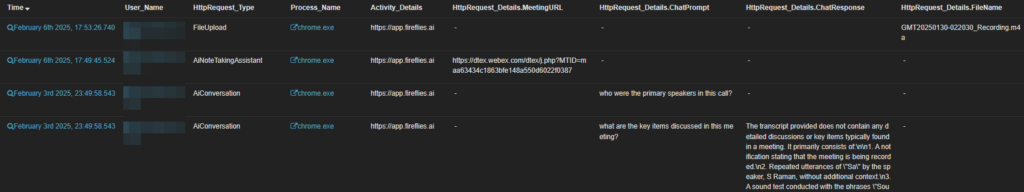

With AI notetaking, data includes the meeting URL that the assistant is joining.
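As a rough illustration of what an analyst could do with a captured meeting URL, the sketch below maps the URL's hostname to a conferencing platform. The `meeting_platform` helper and the host table are assumptions made for this example, not part of DTEX's product, and the table is deliberately incomplete.

```python
from urllib.parse import urlsplit

# Illustrative host-to-platform table; real deployments would need more entries.
MEETING_HOSTS = {
    "zoom.us": "Zoom",
    "meet.google.com": "Google Meet",
    "teams.microsoft.com": "Microsoft Teams",
}


def meeting_platform(url):
    """Return the platform name for a meeting URL, or 'unknown'."""
    host = (urlsplit(url).hostname or "").lower()
    for suffix, platform in MEETING_HOSTS.items():
        # Match the host itself or any subdomain of it.
        if host == suffix or host.endswith("." + suffix):
            return platform
    return "unknown"
```

Grouping notetaker activity by platform this way can show where third-party assistants are joining meetings most often.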

AI Notetaking Assistant Chats

Keep in mind that AI notetaking assistants can also chat like ChatGPT. We capture those chats too, as well as the responses that come back.

This can help flag sensitive info being summarized or presented to the end user.

As I've said before, in security, visibility and control are everything. For security teams, and particularly those managing insider risk, understanding web application activity is critical to risk-adaptive protection, because any number of actions can lead to unauthorized sharing, use, or transfer of company data. It is vitally important that organizations take this seriously and figure out, before a data breach, what kind of security policies to put in place to address their unique risk posture.

Check out my blog from last year about how DTEX InTERCEPT can help capture GenAI usage and drive policy. Learn more about managing insider risk related to AI misuse and abuse here or schedule a demo to see how DTEX can help.
